背景

一直对容器化主机非常感兴趣,其对物理机的资源使用和租户的隔离型是非常有利的,可惜在国内IDC系统中,无论是魔方还是 Prokvm,还是延续着传统的VM 虚拟化。

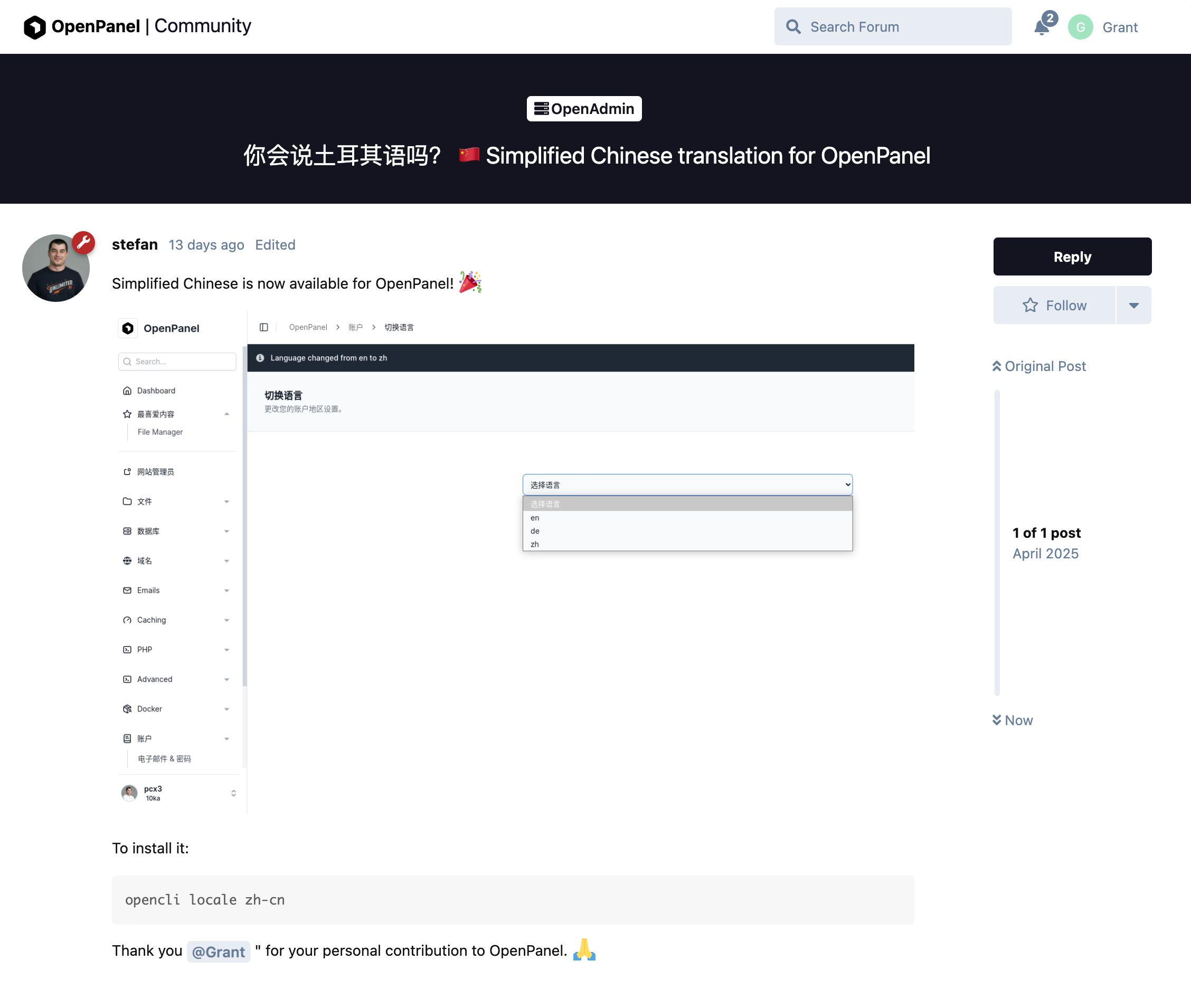

这不得不让我关注国外的主机面板,其中 OpenPanel 吸引了我的兴趣,虽然其并没有完全开源,属于半开源的状态,但是他的设计理念和我理想的几乎一致,怀着激动的心情,我尝试了一下,发现它目前还处在发展阶段,还有一些 BUG 没有解决,其次,就是它没有中文,我决定自己贡献一下中文翻译。

由于我的英语一向不太好,不能指望自己一点点的翻译,我决定使用大模型gemma3:12b进行翻译,为此,我写了一个脚本,专门读取他的标准文件,在我的本地大模型上进行翻译,采用了多进程(Python的多线程🐶都不用)安全翻译,代码如下:

import time

import multiprocessing

from functools import partial

import os

import random

from openai import OpenAI

from tenacity import retry, stop_after_attempt, wait_exponential, retry_if_exception_type

# 翻译函数,调用 OpenAI 接口将英文翻译为简体中文

@retry(

stop=stop_after_attempt(5), # 最多重试5次

wait=wait_exponential(multiplier=1, min=2, max=30), # 指数级退避重试间隔

retry=retry_if_exception_type((Exception)) # 任何异常都重试

)

def OpenPanel_text(text, api_key, base_url):

if not text:

return ""

try:

client = OpenAI(

api_key=api_key,

base_url=base_url

)

response = client.chat.completions.create(

model="gemma3:12b",

messages=[

{"role": "system", "content": "You are now a professional assistant for multilingual translation. My website needs multiple languages. I already have an English version, and I need to translate it into Chinese. Please ensure the translation is authentic and idiomatic, without changing the meaning. Also, any punctuation should remain consistent with the English version."},

{"role": "user", "content": f"You need to output only the translated content, without any other information. This is the English content that needs to be translated: {text}"}

],

temperature=0.3,

max_tokens=1000,

)

translated_text = response.choices[0].message.content.strip()

print(f'原始: {text}\n翻译后: {translated_text}\n---')

return translated_text

except Exception as e:

print(f"Error translating '{text}': {str(e)}")

raise

def parse_pot_file(pot_file_path):

with open(pot_file_path, 'r', encoding='utf-8') as pot_file:

content = pot_file.read()

# 将文件分割为每个翻译条目

blocks = content.split('\n\n')

parsed_blocks = []

for block in blocks:

if not block.strip():

parsed_blocks.append(('', block)) # 空块

continue

lines = block.split('\n')

msgid_value = ""

# 查找 msgid 行

for line in lines:

if line.startswith('msgid "'):

# 提取 msgid 值,假设它只在一行

msgid_value = line[6:].strip('"')

break

parsed_blocks.append((msgid_value, block))

return parsed_blocks

# 单个翻译工作函数

def translate_block(item, api_key, base_url):

msgid_value, original_block = item

if not msgid_value or msgid_value == '""':

return original_block

# 查找原始 msgstr 行并替换为翻译内容

lines = original_block.split('\n')

for i, line in enumerate(lines):

if line.startswith('msgstr "'):

# 翻译 msgid 内容

translated_text = translate_text(msgid_value, api_key, base_url)

# 替换原始 msgstr 内容

lines[i] = f'msgstr "{translated_text}"'

break

# 添加随机延迟避免API限制

time.sleep(random.uniform(0.5, 1.5))

return '\n'.join(lines)

# 使用多进程翻译 POT 文件

def translate_pot_file_multiprocessing(pot_file_path, output_file_path, num_processes=4):

api_key = "sk-XXXXXX"

base_url = "https://aiapi.xing-yun.cn/v1"

print(f"Parsing POT file {pot_file_path}...")

parsed_blocks = parse_pot_file(pot_file_path)

# 创建部分函数,设置固定参数

translate_func = partial(translate_block, api_key=api_key, base_url=base_url)

print(f"Starting translation with {num_processes} processes for {len(parsed_blocks)} blocks...")

translated_blocks = []

with multiprocessing.Pool(processes=num_processes) as pool:

# 使用 imap 保持顺序并显示进度

for i, result in enumerate(pool.imap(translate_func, parsed_blocks)):

translated_blocks.append(result)

print(f"Progress: {i+1}/{len(parsed_blocks)} completed")

print("Translation completed. Writing to output file...")

with open(output_file_path, 'w', encoding='utf-8') as output_file:

output_file.write('\n\n'.join(translated_blocks))

print(f"Translation saved to {output_file_path}")

if __name__ == '__main__':

output_dir = '/Users/wang/Desktop/openpanel-translations/zh_cn'

os.makedirs(output_dir, exist_ok=True)

# 根据CPU核心数设置进程数(留一个核心给系统)

cpu_count = max(1, multiprocessing.cpu_count() - 1)

translate_pot_file_multiprocessing(

'/Users/wang/Desktop/openpanel-translations/en-us/messages.pot',

'/Users/wang/Desktop/openpanel-translations/zh_cn/messages.pot',

num_processes=cpu_count

)结果

最后,毫无疑问,翻译的质量很高,我认为主要是gemma3能力确实比较强,大模型翻译之后,我仅修改了几处不合适的地方,其余的都是正确的🍻。